06 Jan 2015

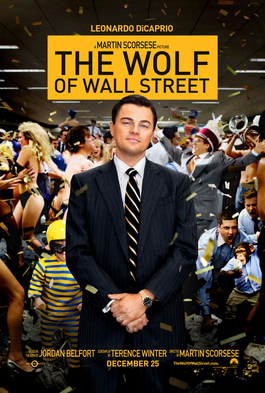

What impressed me most about this film is that the book it was based on was written by Jordan Belfort himself, further proof that you can get rich writing a book about getting rich. Although the gratuitous nudity and drug use make it difficult to recommend publicly, the charm of a rags-to-riches story is certainly enhanced here by revisiting the rags, and the trip back and forth is conveyed with plenty of style.

I felt Leonardo DiCaprio’s performance as Jordan Belfort was judged too harshly by those who said he overacted in hopes of sealing an Academy Award. The beginning of the film, which shows his character working through a brief stage of naivety about the world, is completely convincing and serves as a stark contrast to the middle of the film, in which he was barely recognizable in my eyes. That alone demonstrates his versatility in portraying both the pedestrian sections of the script and the “Oscar-baiting” scenes.

In terms of what could be improved, the runtime was a bit too long at three hours. The film might have benefited from trimming a few of the many pep rallies, which went above and beyond in establishing the mood of a money-hungry investment firm. I found myself re-watching parts of the film, but consistently skipping those scenes in particular (or at least large parts of them). I also felt the connection between Matthew McConaughey’s character and Leonardo DiCaprio’s character was too thin to keep re-establishing throughout the film. Perhaps if his mentorship had grown beyond a single drug-fueled lunch, I would have less trouble believing the impact it had on Jordan’s development.

02 Nov 2014

Most GitHub users know they can tag issues and pull requests to keep them organized. Some also know that it’s a good idea to create your own tags to suit your team’s individual needs. Our team uses some tags that help with communication in larger projects with many collaborators. They’re not the usual permanent issue labels, but rather stateful indicators that show teammates the status of a pull request at a glance.

The Background

Like many experienced git users, I make branches for nearly everything. And as an experienced git user in a team-based environment, I tend to stagger those branches to introduce large changes in the following order.

- Outline the impact, typically by changing the directory structure.

- Submit blank tests that serve as a rough contract of the change.

- Write code that would allow the tests to pass.

- Write tests.

The advantages of this approach are huge. Pull requests generally hover around 200 lines added or removed at any given time. No phase of the full change should break the build automation, and if one does, there’s an opportunity to discuss the value, the approach, or the technique used at that point, or at any point before it. You also get a good idea of what needs to happen in subsequent pull requests as you go along. For me, having 700 to 900 lines of code changes in a single pull request is unacceptable (unless you’re checking in machine-generated data).

The downsides are pretty obvious too: the reviewer and I both have to juggle four or more pull requests at once. If there’s a typo in the second pull request, I have to fix it there and then rebase every following branch on top of it again. This can lead to excessive force-pushing, and extra communication with any reviewers who like to check out the code locally as they review.
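The rebase chore described above can be scripted. This is a minimal sketch, assuming each branch in the stack was cut from the one before it and that the typo fix lands as a new commit on the earlier branch (not an amend); the `restack` helper and the `phase-N` branch names are hypothetical. It replays each later branch onto its freshly updated predecessor, in order.

```bash
#!/usr/bin/env bash
# Sketch: after committing a fix to an earlier branch in a stack, replay each
# later branch onto its updated predecessor, one at a time.
# Assumption: every branch was originally cut from the branch listed before it.
# Usage: restack phase-2 phase-3 phase-4
restack() {
  local prev="$1"
  shift
  local branch
  for branch in "$@"; do
    # Checks out $branch and rebases it onto $prev. Commits whose changes are
    # already upstream (same patch) are skipped by git. If a rebase stops on a
    # conflict, return 1 and leave the conflict for you to resolve.
    git rebase --quiet "$prev" "$branch" || return 1
    prev="$branch"
  done
}
```

After committing a fix on `phase-2`, running `restack phase-2 phase-3 phase-4` leaves you on `phase-4`. Each rewritten branch still has to be pushed, for example with `git push --force-with-lease origin phase-3`, which refuses to overwrite a remote branch that has changed since you last fetched it.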

The Summary

One of the main issues I had with this workflow involved the previously mentioned communication overhead. I’d see three or four of my own pull requests open, and four comments split up over two of them. I’d need to constantly remind myself which branch needed what changes, especially for routine tasks like rebasing against master. We discussed the idea of having tags that don’t represent the essence of an entire issue, but rather, the current state of an open pull request.

Eventually we agreed to add the following tags. They’ve been very helpful for us, and you should consider adding them to your project as well.

- Pull Request Reviewed with Comments

A persistent “you’ve got mail” for the pull request. Once cleared, this means the submitter has responded to comments.

- Pull Request Merge on CI/CD Passes

Helpful for when you want to let everyone know that the pull request is good, and no other actions need to be taken once the automation runs with a clean exit. I’ve had a great time merging two or three pull requests I knew nothing about this way.

- Pull Request Needs Rebase

For when a pull request stays open too long and another pull request merged ahead of it has introduced a conflict.

- Pull Request Needs Squash

Lets the pull request owner know that they’ve addressed reviewer feedback successfully, and need to tidy up the commit log by melding all commits into one.

Once any of the above tasks are completed, the submitter removes the tag.
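If you would rather script these tags than click through the labels page, GitHub’s REST API has an endpoint for creating labels (`POST /repos/:owner/:repo/labels`, which takes a `name` and a six-digit hex `color`). The sketch below assumes bash and curl; `OWNER`, `REPO`, `GITHUB_TOKEN`, the helper names, the `--apply` flag, and the colors are placeholders chosen for illustration, not part of our actual setup.

```bash
#!/usr/bin/env bash
# Sketch: create the temporary "state" labels through GitHub's REST API.
# Assumptions: GITHUB_TOKEN holds a token that can manage the repository's
# labels, OWNER/REPO are placeholders, and the colors are arbitrary six-digit
# hex values (GitHub expects them without a leading '#').
OWNER="${OWNER:-your-name}"
REPO="${REPO:-your-repo}"

# Print the JSON body for POST /repos/:owner/:repo/labels.
label_payload() {
  printf '{"name": "%s", "color": "%s"}' "$1" "$2"
}

# Create one label and print the HTTP status code (201 means created).
create_label() {
  curl -s -o /dev/null -w '%{http_code}\n' -X POST \
    -H "Authorization: token $GITHUB_TOKEN" \
    -d "$(label_payload "$1" "$2")" \
    "https://api.github.com/repos/$OWNER/$REPO/labels"
}

# Only call GitHub when explicitly asked, e.g. ./create-labels.sh --apply
if [ "${1:-}" = "--apply" ]; then
  create_label "Pull Request Reviewed with Comments" "fbca04"
  create_label "Pull Request Merge on CI/CD Passes" "0e8a16"
  create_label "Pull Request Needs Rebase" "e11d21"
  create_label "Pull Request Needs Squash" "5319e7"
fi
```

Keeping the network calls behind an explicit flag means the file can be sourced or read without side effects; running it twice simply gets an error status back for labels that already exist.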

Aside from those temporary tags, here are some other handy “traditional” tags to add to your GitHub pull requests.

A signal for other reviewers to weigh in on a possible disruptive change to the codebase.

Subjectively applied to pull requests that will likely be prioritized due to their size. It’s nice to know which pull requests will take 5 minutes to review, and which will take 5 hours.

Reminds anyone who reviews your code locally to refresh their third-party dependencies before trying to vet the changes.

Introduces a backwards-incompatible change to anyone depending on the code featured in the pull request.

30 Aug 2014

If you use Travis CI to run your end-to-end tests, you may want to start with something simple and run a local Selenium server to communicate with the browser. Although Sauce Labs is a really powerful tool for mature, cross-browser projects with solid testing procedures, it can be a bit of a burden to sign up for, pay for, and configure for Travis CI runs. For a project like the one I’m working on, we only care about testing against a modern install of Firefox, and doing that is as simple as adding the following lines to your project’s .travis.yml file.

```yaml
language: node_js
node_js:
  - '0.10'
before_install: npm install -g protractor@1.0.0 mocha@1.18.2
install:
  - npm install
  - webdriver-manager update --standalone
before_script:
  - export DISPLAY=:99.0
  - sh -e /etc/init.d/xvfb start
  - webdriver-manager start &
script:
  - protractor protractor.conf.js
```

This is a bare-bones setup for running (free) end-to-end tests using only the Travis VM, and at first glance it looks normal. You’d mostly be right to think so. Although the last command does start a background Selenium server, it has the awful side effect of polluting the logs with output containing the JavaScript that runs against the browser during the test run.

For example, here is just one of the many tests that run on every pull request of the encore-ui project I’ve been contributing to lately.

```
rxDiskSize
17:01:46.133 INFO - Executing: [get: data:text/html,<html></html>])
17:01:46.168 INFO - Done: [get: data:text/html,<html></html>]
17:01:46.175 INFO - Executing: [execute script: window.name = "NG_DEFER_BOOTSTRAP!" + window.name;window.location.replace("https://localhost:9001/#/component/rxDiskSize");, []])
17:01:46.231 INFO - Done: [execute script: window.name = "NG_DEFER_BOOTSTRAP!" + window.name;window.location.replace("https://localhost:9001/#/component/rxDiskSize");, []]
17:01:46.261 INFO - Executing: [execute script: return window.location.href;, []])
17:01:46.520 INFO - Done: [execute script: return window.location.href;, []]
17:01:46.555 INFO - Executing: [execute async script: try { return (function (attempts, asyncCallback) {
  var callback = function(args) {
    setTimeout(function() {
      asyncCallback(args);
    }, 0);
  };
  var check = function(n) {
    try {
      if (window.angular && window.angular.resumeBootstrap) {
        callback([true, null]);
      } else if (n < 1) {
        if (window.angular) {
          callback([false, 'angular never provided resumeBootstrap']);
        } else {
          callback([false, 'retries looking for angular exceeded']);
        }
      } else {
        window.setTimeout(function() {check(n - 1);}, 1000);
      }
    } catch (e) {
      callback([false, e]);
    }
  };
  check(attempts);
}).apply(this, arguments); }
catch(e) { throw (e instanceof Error) ? e : new Error(e); }, [10]])
17:01:46.567 INFO - Done: [execute async script: try { return (function (attempts, asyncCallback) {
  var callback = function(args) {
    setTimeout(function() {
      asyncCallback(args);
    }, 0);
  };
  var check = function(n) {
    try {
      if (window.angular && window.angular.resumeBootstrap) {
        callback([true, null]);
      } else if (n < 1) {
        if (window.angular) {
          callback([false, 'angular never provided resumeBootstrap']);
        } else {
          callback([false, 'retries looking for angular exceeded']);
        }
      } else {
        window.setTimeout(function() {check(n - 1);}, 1000);
      }
    } catch (e) {
      callback([false, e]);
    }
  };
  check(attempts);
}).apply(this, arguments); }
catch(e) { throw (e instanceof Error) ? e : new Error(e); }, [10]]
17:01:46.580 INFO - Executing: [execute script: angular.resumeBootstrap(arguments[0]);, [[]]])
17:01:46.990 INFO - Done: [execute script: angular.resumeBootstrap(arguments[0]);, [[]]]
17:01:47.022 INFO - Executing: [execute async script: try { return (function (selector, callback) {
  var el = document.querySelector(selector);
  try {
    angular.element(el).injector().get('$browser').
        notifyWhenNoOutstandingRequests(callback);
  } catch (e) {
    callback(e);
  }
}).apply(this, arguments); }
catch(e) { throw (e instanceof Error) ? e : new Error(e); }, [body]])
17:01:47.032 INFO - Done: [execute async script: try { return (function (selector, callback) {
  var el = document.querySelector(selector);
  try {
    angular.element(el).injector().get('$browser').
        notifyWhenNoOutstandingRequests(callback);
  } catch (e) {
    callback(e);
  }
}).apply(this, arguments); }
catch(e) { throw (e instanceof Error) ? e : new Error(e); }, [body]]
17:01:47.059 INFO - Executing: [find elements: By.selector: .component-demo ul li])
17:01:47.071 INFO - Done: [find elements: By.selector: .component-demo ul li]
17:01:47.101 INFO - Executing: [get text: 36 [[FirefoxDriver: firefox on LINUX (54c8e519-0211-45d5-a1f2-64bb2526e652)] -> css selector: .component-demo ul li]])
17:01:47.114 INFO - Done: [get text: 36 [[FirefoxDriver: firefox on LINUX (54c8e519-0211-45d5-a1f2-64bb2526e652)] -> css selector: .component-demo ul li]]
✓ should still have 420 GB as test data on the page
```

Even though I feel it goes without saying, this kind of output is not something I look forward to seeing, especially when my end-to-end tests are breaking the build. In fact, many of the pull request test runs wind up maxing out the logs at 10,000 lines, potentially killing any ability to debug a broken test. The problem compounds when I’m faced with a bug that isn’t reproducible on my machine but breaks in the CI environment.

Fortunately, you can change just one line in your project’s .travis.yml file to prevent this sort of behavior.

```yaml
before_script:
  - export DISPLAY=:99.0
  - sh -e /etc/init.d/xvfb start
  # Immune to logouts, but not VM deprovisions!
  - nohup bash -c "webdriver-manager start 2>&1 &"
```

The end result is a clean, informative test report that looks just like the one you saw on your development machine before you pushed up your branch (hopefully, you did run the end-to-end tests before pushing).

```
$ protractor protractor.conf.js
Using the selenium server at https://localhost:4444/wd/hub

rxDiskSize
✓ should still have 420 GB as test data on the page
✓ should convert 420 GB back to gigabytes
✓ should still have 125 TB as test data on the page
✓ should convert 125 TB back to gigabytes
✓ should still have 171.337 PB as test data on the page
✓ should convert 171.337 PB back to gigabytes
✓ should still have 420 GB as test data on the page
✓ should convert 420 GB back to gigabytes
✓ should still have 125 TB as test data on the page
✓ should convert 125 TB back to gigabytes
✓ should still have 171.337 PB as test data on the page
✓ should convert 171.337 PB back to gigabytes

12 passing (3s)

The command "protractor protractor.conf.js" exited with 0.
```
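If you would rather keep the Selenium output around for the occasional hard-to-reproduce failure instead of losing it, another option is to redirect it to a file yourself and wait for the server to come up before the tests start. This is a minimal sketch, not the setup described above: it assumes `webdriver-manager` is on the PATH and that the server answers on `http://localhost:4444/wd/hub/status`; the `retry` and `start_selenium` helpers and the `selenium.log` file name are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: start Selenium quietly and wait until it is ready.
# Assumptions: webdriver-manager (installed with protractor) is on PATH, and the
# server exposes a status page at http://localhost:4444/wd/hub/status.

# Run a command up to N times, pausing one second between failed attempts.
# Returns 0 as soon as the command succeeds, 1 if every attempt fails.
retry() {
  local attempts="$1"
  shift
  local i=1
  while [ "$i" -le "$attempts" ]; do
    "$@" && return 0
    [ "$i" -lt "$attempts" ] && sleep 1
    i=$((i + 1))
  done
  return 1
}

# Start the server in the background, sending all of its chatter to a file
# instead of the build log, then block for up to ~30 seconds until it answers.
start_selenium() {
  webdriver-manager start > selenium.log 2>&1 &
  retry 30 curl -sf -o /dev/null http://localhost:4444/wd/hub/status
}
```

After sourcing the file, you could call `start_selenium` from `before_script` in place of the `nohup` line, and add an `after_failure` step that runs `cat selenium.log` so the server output only shows up when a build actually breaks.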

Full disclosure: I found this helpful tip while browsing the excellent documentation for codeship.io, a service similar to Travis CI and my personal preference for side projects. However, this article was written for those who, like me, find themselves encountering Travis CI in a project they don’t control.

18 Aug 2014

The free CI/CD site drone.io is a really useful, lightweight alternative to Jenkins for open source repositories. Because it is purpose-built for just one thing, setting up a new project takes literally a tenth of the time it does in Jenkins.

What it lacks, however, is the ability to run CI/CD tests against new pull requests with master merged in first, and to update the pull request’s status while doing so. Most Google searches for help will uncover forum posts with users requesting this feature, followed by promises from the drone.io team to get around to adding it in “six to eight weeks”.

Fortunately, you can roll these things out yourself. Just follow these steps:

- Create an OAuth token from your GitHub user profile.
  - You only need to enable the public_repo and repo:status scopes.

- In your drone.io settings page, paste the token you got from GitHub into the “Environment Variables” textbox, under the variable name the build script reads. For example: `OAUTH_TOKEN=<your token>`

- Next, add some code that replicates the behavior available in other CI/CD providers, such as Travis CI.

```bash
# Report a commit status (pending, success, error, or failure) back to GitHub.
function postStatus {
  curl -so /dev/null -X POST \
    -H "Authorization: token $OAUTH_TOKEN" \
    -d "{\"state\": \"$1\", \"target_url\": \"$DRONE_BUILD_URL\", \"description\": \"Built and tested on drone.io\", \"context\": \"Built and tested on drone.io\"}" \
    "https://api.github.com/repos/:YOUR_NAME:/:YOUR_REPO:/statuses/$DRONE_COMMIT"
}

postStatus pending

# Merge master into the branch under test before building it.
git merge master $DRONE_BRANCH
if [ $? -ne "0" ]; then
  echo "Unable to merge master..."
  postStatus error
  exit 1
fi
```

Finally, add code for your specific project to actually do some testing. Here’s a simple example that you might use for a Node app.

```bash
npm install --loglevel=warn
if [ $? -ne "0" ]; then
  postStatus error
  exit 1
fi

npm test
if [ $? -eq "0" ]; then
  postStatus success
else
  postStatus failure
  # Fail the drone.io build too, not only the GitHub status.
  exit 1
fi
```

That’s it! Once your basic drone.io project is set up, everything else should just work.
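The repeated `$?` checks in the script above can also be folded into a couple of helpers. This is a sketch under a few assumptions: `OAUTH_TOKEN` is set in drone.io’s environment variables as described above, `DRONE_COMMIT`, `DRONE_BRANCH`, and `DRONE_BUILD_URL` are the drone.io variables the original script already relies on, and `GITHUB_REPO` (in `owner/repo` form) plus the helper names are hypothetical. The build only runs when `DRONE_COMMIT` is set, so the file can be sourced elsewhere without touching GitHub.

```bash
#!/usr/bin/env bash
# Sketch of the drone.io build script with the repeated "$?" checks folded into
# helpers. Assumptions: OAUTH_TOKEN is set in drone.io's environment variables;
# DRONE_COMMIT, DRONE_BRANCH and DRONE_BUILD_URL come from drone.io; GITHUB_REPO
# ("owner/repo") is a hypothetical variable you would set yourself.

# Build the JSON body for GitHub's commit status API.
status_payload() {
  printf '{"state": "%s", "target_url": "%s", "description": "%s", "context": "%s"}' \
    "$1" "$DRONE_BUILD_URL" "Built and tested on drone.io" "Built and tested on drone.io"
}

# Report pending, success, error or failure for the commit being built.
post_status() {
  curl -s -o /dev/null -X POST \
    -H "Authorization: token $OAUTH_TOKEN" \
    -d "$(status_payload "$1")" \
    "https://api.github.com/repos/$GITHUB_REPO/statuses/$DRONE_COMMIT"
}

# Run a setup step; if it fails, mark the commit as errored and stop the build.
require() {
  "$@" || { post_status error; exit 1; }
}

main() {
  post_status pending
  require git merge master "$DRONE_BRANCH"
  require npm install --loglevel=warn
  if npm test; then
    post_status success
  else
    post_status failure
    exit 1   # make the drone.io build fail as well
  fi
}

# Only run the build inside drone.io, where DRONE_COMMIT is set.
if [ -n "${DRONE_COMMIT:-}" ]; then
  main
fi
```

The split between `error` (setup could not run) and `failure` (tests ran and failed) mirrors the states GitHub’s status API accepts, so reviewers can tell a broken merge from a broken test at a glance.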

10 Aug 2014

On Friday, I had finished my tasks for the day a little early and decided to check up on the co-workers around me, just to see if any of them were blocked or had anything interesting they’d like to show me. It turned out one had been stuck on an issue for the last 45 minutes. He had at least fifteen tabs open, sighed a lot, and was visibly exhausted. Basically, he was sending about as clear a signal as anyone can that he was debugging some odd behavior. The issue at hand revolved around a really pleasant function, as far as troubleshooting goes:

```javascript
var transformUser = function (users) {
  return _.omit(users, '_links');
};
```

Suddenly, this function had started returning a nearly empty (but successful) response on staging. Nobody was sure why, and since his team had promoted a build to staging earlier that day, he had been digging deep into his codebase and its dependencies trying to find the culprit.

I immediately asked him to show me what the correct response should look like. He made a call directly to the upstream API using Postman, which returned a list of users that would be passed into this function. This was the exact same call his app was making. So we copied the JSON payload, ssh’d into staging, and ran node from the project’s directory, ensuring the dependencies were an exact match. Sure enough, we found that lodash’s omit function still worked just fine. But what was the next step?

I said, “Change the function to this.”

```javascript
var transformUser = function (users) {
  return users;
  // return _.omit(users, '_links');
};
```

He looked at me like I had just asked him to double-check whether his computer was still connected to the internet. At first he ignored me, saying he had just shown me the users call in Postman, and brought up a couple of other leads he’d been looking into now that we’d ruled out lodash. But I insisted, telling him it’d only take a couple of seconds. He made the change and ran the broken call again. To his surprise, and my amusement, something like this came back:

```
"\"{\"users\":{\"_links\":{\"data\":{...}}},{\"parents\":\"[...]\"}}\""
```

Apparently, in an attempt to be more RESTful, the upstream API had added custom content types. His Postman client either didn’t need them or had already been configured to use them, but the application had not been updated, and the API (I suppose) had stricter requirements about requesting resources with the correct content type. A few hours later, the upstream API did him a favor and allowed requests that specify application/json as the desired content type to still receive a JSON-encoded response, fixing the root issue with no code change needed.
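In the spirit of checking the trivial assumptions first, it can help to look at the raw body the application receives before any library touches it. This is a quick sketch, assuming bash and curl; `json_shape` is a hypothetical helper that reports whether a body is a JSON object, an array, or a bare string, which is how a double-encoded payload like the one above shows up.

```bash
#!/usr/bin/env bash
# Sketch: classify a raw JSON response body by its first non-space character,
# which is enough to spot a payload that was encoded twice.
json_shape() {
  local body="$1"
  # Strip leading whitespace: remove everything from the first non-space
  # character onward to find the prefix, then drop that prefix.
  body="${body#"${body%%[![:space:]]*}"}"
  case "$body" in
    \{*) echo "object" ;;
    \[*) echo "array" ;;
    \"*) echo "string (double-encoded?)" ;;
    *)   echo "other" ;;
  esac
}
```

Point it at the exact request the application makes, with the same headers, for example `json_shape "$(curl -s -H "Accept: $APP_CONTENT_TYPE" "$USERS_URL")"`, where both variables are placeholders. A body like the one shown above starts with a quote, so it is reported as a string rather than an object.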

Occam’s Razor has many interpretations. Just as you can use it when developing new features (or in this case, API tests!), you can also use it for debugging. Start by validating the most trivial, essential assumptions surrounding the issue before venturing into trickier territory. As much fun as it is to track down and isolate odd behavior in bizarre places (and brag about it after the fact), the reality is that most bugs are far more likely to be pedestrian ones like this.